How Art and Technology are Transforming Creativity

Adrian Reynolds investigates how art and technology are challenging our perceptions in the world of contemporary art. Continue reading to find out more.

Reading Time: 20 minutes

TL;DR

- Discover mesmerising masterpieces that redefine artistic boundaries by fusing art and technology, and own a one-of-a-kind piece that captivates and inspires.

- Explore endless possibilities for creativity and expression as art and technology converge, and commission a custom artwork that reflects your unique vision.

- Uncover captivating stories of talented artists pushing the boundaries of conventional art forms with awe-inspiring creations, and be inspired to support their work.

Introduction: Relationships Between Art and Technology

As I reflected on my article about naming art, I started to think about how we interpret and recognize things around us. This led me to explore the connection between art and technology, particularly in the areas of Artificial Intelligence, Image Recognition, and Deep Learning.

In ancient times, people saw shapes and patterns in their surroundings and interpreted them as omens or messages from the deities. Today, technology has advanced to the point where we can use machines to recognize and interpret these patterns for us.

I began to wonder about the ways technology can be used to create new forms of art that blur the lines between human creativity and machines. By leveraging AI, Image Recognition, and Deep Learning, we might be able to create art that is not only aesthetically pleasing but also thought-provoking and emotionally resonant.

Here are some ways that art and technology are already intersecting:

- Generative Art: AI algorithms are being used to generate art that is unique and unpredictable, blurring the lines between human creativity and machine learning.

- Image Recognition: Technology is being used to analyze and recognize patterns in art, allowing us to better understand the context and meaning behind different works of art.

- Deep Learning: By using deep learning algorithms, machines can recognize and mimic the styles of famous artists, creating new works of art that are inspired by the masters.

These are just a few ideas, but the possibilities are endless. As technology continues to evolve, the relationship between art and technology will only become more intertwined.

DISCLAIMER

It should be noted here that I am not a computer expert, scientist, or mathematician. I have done my best to become acquainted with the correct terminology and concepts of this subject, and I hope that my research and this post are generally free of major flaws.

What is Apophenia?

Apophenia is a psychological phenomenon where individuals perceive connections and meaningfulness in unrelated things. This can be a normal and harmless experience, but it can also be a symptom of certain mental health conditions, such as paranoid schizophrenia. In this condition, individuals may see ominous patterns where there are none, and assign meaning to random or unintended events.

The term “apophenia” was coined by psychiatrist Klaus Conrad in 1958, and it is derived from the Greek words “apo” (meaning “away from”) and “phaino” (meaning “to appear”). It refers to the experience of seeing something that is not actually there, or perceiving a connection where there is none.

Apophenia can manifest in different ways, such as seeing patterns in random numbers or perceiving hidden messages in everyday objects. It can also be associated with other psychological phenomena, such as pareidolia (the tendency to see familiar patterns or images in random stimuli) and the gambler’s fallacy (the belief that the outcome of a random event is influenced by earlier, independent outcomes, for example that a coin is “due” to land on heads after a run of tails).

While apophenia can be a normal and harmless experience, it can also be a symptom of certain mental health conditions, such as schizophrenia or obsessive-compulsive disorder. In these cases, the experience of apophenia can be distressing and interfere with an individual’s daily life. Equally, it can be associated with creativity, originality, and inspiration.

It is important to note that apophenia is not the same as randomania, which is the opposite experience of apophenia. Randomania is when an individual experiences a revelation or a pattern, but mistakenly believes that it is a delusion.

Agenticity, on the other hand, is a term coined by Michael Shermer to describe the tendency to fill patterns with meaning, intention, and agency. This can be seen in the interpretation of abstract art, where individuals may see meaning or intentionality in the patterns and shapes depicted in the artwork.

Overall, apophenia is a fascinating psychological phenomenon that can have both harmless and harmful effects, depending on the context in which it occurs.

"As we know, there are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns, the ones we don't know we don't know."

Donald Rumsfeld, February 12, 2002, United States Secretary of Defense

What Exactly is Pareidolia?

Pareidolia is a fascinating phenomenon where people perceive patterns or images in random or ambiguous stimuli. This can lead to the interpretation of innocuous objects as having human-like features or meanings. The example of the faces on Mars is a classic case of pareidolia, where the human brain mistakenly perceived patterns as faces due to the desire to recognize familiar shapes.

The ability to perceive faces and interpret their emotions is a fundamental aspect of human cognition, and it serves an important social function. However, this ability can also be misled by pareidolia, leading to the perception of false or misleading information.

Young children are indeed more prone to pareidolia due to their developing brains and the lack of life experience. Their imaginative minds and desire to make sense of the world around them can lead to the interpretation of inanimate objects as having human-like qualities. This can be seen in the way children often attribute human emotions and intentions to objects, such as toys or stuffed animals.

In the context of image recognition, artificial intelligence, and deep learning, pareidolia can be both a blessing and a curse. On the one hand, the ability of machines to recognize and interpret human faces is a key aspect of many applications, such as facial recognition software and human-computer interaction.

On the other hand, the risk of false positives or misinterpretation due to pareidolia can be a significant challenge, particularly in applications where accuracy is critical, such as in medical diagnosis or security systems.

Overall, I believe pareidolia to be an intriguing phenomenon that demonstrates the intricacies and limitations of human perception and cognition. By understanding and acknowledging the potential for pareidolia in image recognition and other applications, we can work to mitigate its effects and improve the accuracy and reliability of these systems.

Image Recognition: The Double-Edged Sword of Data Privacy

Image recognition technology has become a ubiquitous part of our lives, with applications in everything from smartphones and computers to passports and CCTV security cameras. While it offers numerous benefits, such as enhanced security and convenience, it also raises significant concerns about data privacy and the potential for misuse.

Can We Camouflage Ourselves in Plain Sight?

As data privacy campaigners and their supporters continue to voice their concerns about the use of image recognition technology, some have explored the idea of using realistic face masks, or other tricks that border on “urban myth”, to evade these systems.

However, this approach raises more questions than it answers. For instance, if we can use masks to deceive image recognition systems, what does that say about our identity and the nature of privacy in the digital age?

Exploring the Philosophy of Facial Recognition

At a deeper level, the use of image recognition technology raises profound philosophical questions about what it means to be human and how we interact with technology. For example, if our faces are no longer a unique identifier, what other aspects of our identity do we rely on to define ourselves? Moreover, how do we balance the benefits of technology with the need to protect our privacy and personal data?

Rights and Responsibilities in the Age of Image Recognition

As we continue to grapple with the implications of image recognition technology, it’s essential to consider our rights and responsibilities in relation to DNA, biometrics, and the protection of our personal data.

While technology has the power to enhance our lives, it also requires careful management and regulation to ensure that it is used in a way that respects our privacy and human rights.

Storage – Cloud Full?

When we think about stone carvings on ancient walls, they would probably equate to only a few kilobytes of data. Now we have an ever-increasing need for digital storage, with ever-increasing ‘megapixel’ quality for images and video that requires more and more storage capacity.

Over the last few decades, we have seen advances in storage formats, from tape and floppy discs, which gradually degraded and were never entirely reliable, to laser-read compact and Blu-ray discs and silicon-based storage. Storage has physically reduced in size while increasing in capacity, all to accommodate our shrinking personal devices.

Although not yet commercially available, scientists at the University of Southampton have developed a glass disc called 5D optical data storage. Sometimes referred to as the ‘Superman Memory Crystal’, it is a nanostructured glass for permanently recording digital data using a femtosecond laser writing process. This memory crystal is capable of storing up to 360 terabytes worth of data for up to 13.8 billion years.

To put this in some context, I remember the excitement of my father getting a 3.5k RAM motherboard expansion cartridge for our first personal home computer, the Commodore VIC-20.

The excitement was short-lived, as was the typing out of lines and lines of code from a computer magazine to see a video game that did not represent the beautifully illustrated world that it promised eight-year-old me!

But let’s be real, loading a video game from a cassette tape was no picnic – especially if the cassette tape was knocked during loading…

Today, we’re pushing the limits of storage technology to keep up with our insatiable demand for digital content. And with advancements like the ‘Superman Memory Crystal’, we may soon have all the storage space we need – and then some!
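For a rough sense of scale, the two storage figures mentioned above can be put side by side. This is only a back-of-the-envelope sketch: it assumes decimal units (1 KB = 1,000 bytes, 1 TB = 10^12 bytes), and the variable names are made up for illustration.

```python
# Rough comparison of the two storage sizes mentioned in this section.
# Assumption: decimal units (1 KB = 1,000 bytes, 1 TB = 10**12 bytes).
KB = 10**3
TB = 10**12

vic20_expansion_bytes = 3.5 * KB   # the 3.5k RAM expansion cartridge
memory_crystal_bytes = 360 * TB    # quoted capacity of the 5D optical disc

# How many expansion cartridges' worth of data fit on one crystal?
ratio = memory_crystal_bytes / vic20_expansion_bytes
print(f"One memory crystal holds roughly {ratio:.2e} times as much data.")
```

Under those assumptions, a single disc would hold on the order of a hundred billion times what that cartridge could.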

Artificial Intelligence

Artificial Intelligence (AI) is rapidly advancing, but we’re still far from achieving human-level machine intelligence (HLMI). Ironically, the most difficult mental tasks for humans are easy peasy for computers. But while AI excels in formal tasks, it’s people who end up working like machines, labeling and classifying data for AI systems.

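Computer vision and speech recognition have made huge strides in the 21st century, and AI-generated art is becoming more prevalent. In 2014, Generative Adversarial Networks (GANs) were introduced by the computer scientist Ian Goodfellow and his colleagues. A GAN is a generative model in which two neural networks are trained against each other: a generator produces new samples, and a discriminator tries to tell them apart from real examples.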

An algorithm is defined as, ‘a finite sequence of well-defined, computer-implementable instructions, typically to solve a class of problems or to perform a computation’. (It’s OK, I’m an artist and I’m not sure either).
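If that definition feels abstract, a classic example may help. The sketch below is Euclid's algorithm for finding the greatest common divisor of two whole numbers: a short, finite list of well-defined steps that always ends with an answer. The function name is chosen here purely for illustration.

```python
def greatest_common_divisor(a: int, b: int) -> int:
    """Euclid's algorithm: repeat one simple step until nothing is left over."""
    while b != 0:
        # Replace the pair (a, b) with (b, remainder of a divided by b).
        a, b = b, a % b
    return abs(a)


print(greatest_common_divisor(48, 18))
```

Every step is unambiguous, the remainder shrinks each time round the loop, and so the process is guaranteed to stop, which is exactly what the definition asks for.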

Another area where AI is gaining traction is Business Process Automation (BPA), specifically robotic process automation. This software automates repetitive human tasks, freeing up time for more important things… like creating even more advanced AI systems!

But there’s a catch: Oxford University predicts that up to 35% of all jobs could be automated by 2035. So, will we be working alongside machines or replaced by them? Only time will tell.

Machine Learning

In today’s data-driven world, machine learning is everywhere! From tagging objects and people in pictures to personalised video recommendations, this technology is revolutionising the way we live and work.

With the never-ending stream of data pouring in every day, machine learning is the key to unlocking insights and making predictions.

By enabling machines to learn and adapt through experience, this technology is helping us solve complex problems and improve our lives in countless ways, from entertainment to health diagnostics.

Machine learning is one way to achieve artificial intelligence: rather than being given explicit instructions for every case, the machine improves its performance by learning from examples.
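As a toy illustration of what "learning through experience" can mean, the sketch below starts with no knowledge and gradually adjusts two numbers until a straight line fits some example points. The data is made up for the example (it follows y = 2x + 1), and the learning rate and number of rounds are arbitrary choices; real machine learning systems are far larger, but the idea of repeatedly nudging parameters to reduce error is similar.

```python
# Toy "learning from experience": fit y = w*x + b to example points
# by repeatedly nudging w and b to shrink the prediction error.
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]  # made-up data, y = 2x + 1

w, b = 0.0, 0.0        # start out knowing nothing
learning_rate = 0.05   # size of each nudge (an arbitrary choice)

for _ in range(5000):
    grad_w = grad_b = 0.0
    for x, y in examples:
        error = (w * x + b) - y                  # how wrong the current guess is
        grad_w += 2 * error * x / len(examples)  # direction that increases the error
        grad_b += 2 * error / len(examples)
    # Step in the opposite direction to reduce the error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"learned w={w:.2f}, b={b:.2f}")
```

After enough rounds the learned values settle very close to 2 and 1, even though the program was never told the rule, only shown examples of it.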

Deep Learning

Deep learning, also known as deep neural learning or deep neural networks, is a subset of machine learning in artificial intelligence (AI) that uses many-layered neural networks, which are capable of learning even from unstructured or unlabelled data.

In 2015, Alexander Mordvintsev, researcher, artist and Google engineer, released DeepDream, and in doing so created an entirely new subgenre of art using neural networks.

DeepDream is a computer vision program that has transformed how we can visualize images with the application of AI.

You may be familiar with using image editing software plugins and actions to generate a variety of visual effects. If you are interested in computer graphics or photography, I suggest you explore some of these fascinating and easily accessible AI art generator tools.

AI Deep Learning – Computer Vision

In addition to DeepDreamGenerator, the following list outlines a brief evolution of computer vision, and the ways machines have come to interpret and generate images:

- pix2pix (2016) Works by using paired images.

- CycleGAN (2017) Converts images without the need for paired training images.

- BigGAN (2018) Developed by Dr. Andrew Brock, et al., focused on increasing the scale and number of parameters of the model, which resulted in ‘High Fidelity Natural Image Synthesis’.

- StyleGAN (2018) Developed by Nvidia researchers. This really highlights how careful we need to be when viewing human faces. For example, check out thispersondoesnotexist (or even the versions for Art, Cats, Horses, Chemicals).

- Sketch2Code (2018) Developed by Microsoft AI, transforms any hand-drawn design into HTML code with AI. For those that have tried to learn raw HTML, I think this is an amazing concept.

- Fashion++ (2019) Developed by Facebook AI, it is designed to recommend changes to your outfit that would make it more fashionable.

- AlphaFold (2020) Developed by Google-owned DeepMind, has used deep learning to solve a 50-year-old problem of how a protein folds into a unique three-dimensional shape. This has great potential in the development of scientific research for drugs used to treat disease.

If you are a coder or interested in machine learning and solving challenging, real-world problems you probably already know that TensorFlow is your first port of call.

For example, we take for granted the usefulness of Google Street View, but in combination with deep learning, it can be used to estimate the demographic makeup of neighbourhoods, as this research article explores.

We are clearly in the midst of a new creative revolution, with unparalleled access to strong tools for creators. AI is democratising the creative process and putting power in the hands of the masses in fields ranging from art to music to writing and beyond.

Imagine being able to bring your ideas into reality with a few clicks, or having AI help you write the ideal poetry or song. That future has already arrived, and it will only get more spectacular as technology advances.

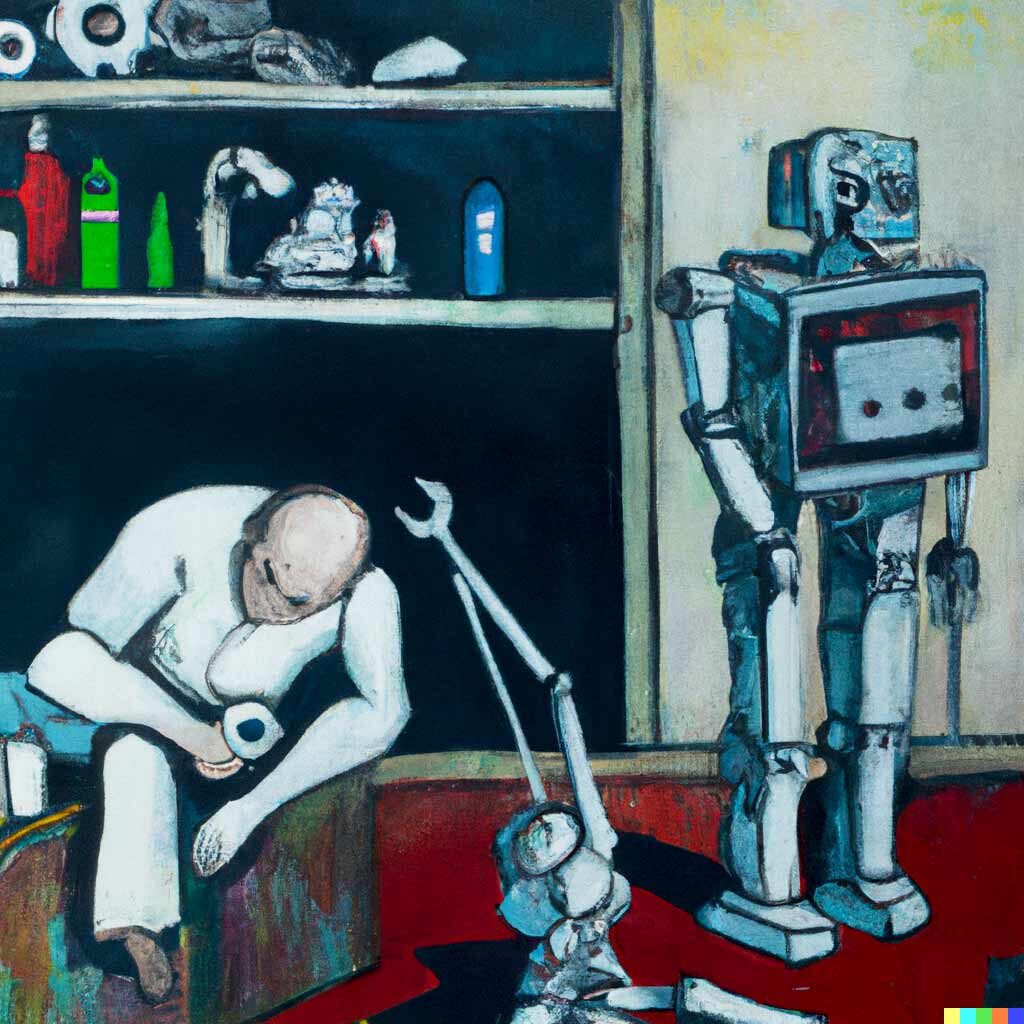

AI Generated Art

AI is more than just a tool for creating content. A neural network that can turn a written sentence into art is changing our understanding of the creative thought process. Tools such as DALL-E are neural networks that take a line of text and generate an image matching the description in that sentence.

However, the ethics of AI art and DALL-E pose new challenges. The type of technology DALL-E and other solutions utilise will make it simpler to produce misleading images. The technology will make it easier for rogue actors to synthesise still photos and video.

The possibility of text-to-image AI generated art replacing graphic design jobs raises further serious ethical concerns. But is it any different to when the word processor was introduced and the typing pool disappeared? Here are some of my thoughts:

- Creativity vs. Clerical Work: While word processors automate clerical tasks such as typing and editing, AI-generated art has the potential to automate creative tasks such as image creation and design. This could fundamentally change the nature of certain jobs and industries.

- Skill Level: Word processors require basic typing skills, but AI-generated art requires a deeper understanding of language and image creation. This means that the jobs that AI-generated art could potentially displace may be more skilled and require more education.

- Customization: Word processors were designed to perform a specific set of tasks, while AI-generated art is a more general-purpose tool that can be used for a wide range of applications. This could make it more difficult to replace human workers with machines.

- Collaboration: AI-generated art tools are designed to work with humans rather than replace them. They can assist with tasks such as image creation but still require human input and oversight to produce high-quality results. This could lead to new forms of collaboration between humans and machines.

- Ethical Considerations: The introduction of AI-generated art raises new ethical considerations, such as the potential for bias in the training data and the impact on employment. For example, you can imagine that more of us will be able to do graphic design because we will be able to say, “Paint me a picture” and get that picture whenever we want, whereas that image was previously created by a graphic designer or artist. These issues were not as prominent with the introduction of word processors.

However, what I’ve really discovered with this new technology is that it can help artists get out of a creative slump. This also implies that the thought that goes into the image request, or skill of prompt engineering, would therefore result in more creativity.

Programming and Encrypting the Art

Artists and computer scientists learn and research the possibilities of applying “artistic thinking” and “engineering thinking” to their processes. Although there is widespread discussion about whether coding is an art form in itself, many people argue that computer codes and algorithms can be understood as a creative and artistic practice.

Let’s survey how the worlds of programming and art interact. In 2013 The Smithsonian’s Cooper-Hewitt, National Design Museum began acquiring “Art Code” for its permanent collection. In collaboration with Ruse Laboratories, they created the first algorithm auction, which was held in 2015. The art sales included key figures in the art world and it was the first algorithm auction to celebrate computer art.

Artists who work with computer art are known as “interactive artists” and we can divide them into two groups depending on the approach:

- The first group is interested in representing the coding itself. For example, “Turtle Geometry,” a system dating from 1981, shows how the effective use of computers can change the way students interact with and understand maths. The system was developed by MIT professor Hal Abelson, who has campaigned for the freedom of the Internet. “Turtle Geometry” had a major impact on the world of technology education and was featured at auction as a commemorative print of one of the earliest versions, signed by Abelson.

- The second group of interactive artists aims to create works of art where code is not directly represented, but the viewer can see their ability to build pieces that are practical or have aesthetic value.
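To give a flavour of the turtle idea mentioned in the first group, here is a minimal sketch in Python. It is not Abelson's original system; the `Turtle` class and its `forward` and `left` methods are written here only to illustrate the principle that a drawing can be described as a sequence of "move forward" and "turn" instructions.

```python
import math


class Turtle:
    """A minimal turtle: it has a position and a heading, and leaves a trail."""

    def __init__(self) -> None:
        self.x, self.y = 0.0, 0.0
        self.heading = 0.0            # degrees; 0 points along the x axis
        self.trail = [(0.0, 0.0)]     # every point visited so far

    def forward(self, distance: float) -> None:
        angle = math.radians(self.heading)
        self.x += distance * math.cos(angle)
        self.y += distance * math.sin(angle)
        # Round to hide tiny floating-point errors in the recorded trail.
        self.trail.append((round(self.x, 6), round(self.y, 6)))

    def left(self, degrees: float) -> None:
        self.heading = (self.heading + degrees) % 360


turtle = Turtle()
for _ in range(4):        # "forward, then turn left 90 degrees", four times
    turtle.forward(100)
    turtle.left(90)

print(turtle.trail)       # the corners of a square, ending back at the start
```

Changing the turn angle or the number of repeats produces triangles, stars and spirals, which is a large part of why the approach proved so engaging for teaching maths.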

For example, DevArt, a collaboration between the Barbican in London and Google, is a platform where programmers push the boundaries of art and technology to create amazing art. The platform brought together some of the best interactive artists in the world to use code to create unique pieces of art.

The Digital Revolution Exhibition explores and celebrates the influence of artificial intelligence, virtual reality and other technologies on the execution and understanding of the links between art and technology.

Artists Working with Technology

The world of art is constantly evolving, and one of the most fascinating developments in recent years is the collaboration between artists and technology. This collaboration brings together the unique perspectives and skills of both humans and non-human systems, resulting in groundbreaking creations that push the boundaries of traditional art forms.

One notable example of this collaboration is the use of Generative Adversarial Networks (GANs) in creating portrait paintings. GANs are a type of artificial intelligence that can generate new images based on existing data. When applied to portraiture, GANs can produce stunning and realistic paintings that capture the essence of a subject.

The dynamics between humans and systems in this process are intriguing. Artists provide their creative vision, expertise, and emotional depth, while technology offers its computational power and ability to analyse vast amounts of data. Together, they create a harmonious fusion that produces artworks with a unique blend of human expression and technological precision.

This collaboration opens up new possibilities for artists to explore their creativity and experiment with different techniques. It challenges traditional notions of authorship by blurring the lines between human-made art and machine-generated content.

As we move forward into an increasingly digital age, it is exciting to witness how artists embrace technology as a tool for self-expression. The partnership between humans and non-human systems promises to revolutionise the art world, pushing artistic boundaries further than ever before.

Artists Working with Technology: Obvious

I discovered the Paris based arts-collective Obvious and took the time to read their manifesto which gives a great insight to their modus operandi.

For example, La Famille de Belamy is a series of Generative Adversarial Network portrait paintings, constructed in 2018 by the collective. These paintings are based on numerous images acquired from many classical European art movements, which are then subjected to the mathematical formulas of the GAN.

Founded by Jeff Bezos, Blue Origin will carry Edmond De Belamy in its first journey to space. This iconic work was the first piece of AI-generated art sold by Christie’s, fetching $432,500 in 2018.

I strongly recommend you check out the work of Obvious. Their manifesto and concepts make for some thought-provoking reading and viewing.

I think it would be very interesting to see how an algorithm would treat a variety of abstract acrylic fluid paintings, as this technique in itself is so full of ‘luck and chance’.

Luck is inevitable but chance is optional, or maybe, chance is the first step you take and luck is what comes afterwards?…

“The shadows of the demons of complexity awaken by my family are haunting me.”

Edmond De Belamy

Artists Working with Technology: Sougwen Chung 愫君

Sougwen Chung 愫君, a Chinese-Canadian artist and researcher, is at the forefront of exploring the fascinating intersection between humans and machines. Their work delves into the relationship between the mark made by-hand and the mark made by-machine, offering a unique perspective on understanding the dynamics between humans and systems.

Chung’s artistic practice revolves around human and non-human collaboration, where traditional artistic techniques are combined with cutting-edge technology. By embracing this collaborative approach, conventional notions of creativity are challenged while boundaries in art-making are pushed.

Through their work, Chung invites us to question our preconceived notions about what it means to create art in a technologically advanced world. They explore how humans can coexist with machines in a harmonious way, leveraging their respective strengths to create something truly remarkable.

Chung’s innovative approach has garnered international recognition and acclaim, as thought-provoking installations have been exhibited in prestigious galleries and museums around the world, captivating audiences with their mesmerising blend of human expression and technological prowess.

As we navigate an increasingly digital landscape, Chung’s work serves as an inspiration for artists, researchers, and enthusiasts alike. Their exploration of human-machine collaboration opens up new possibilities for creative expression while challenging us to rethink our relationship with technology.

Virtual Reality in Art & Technology

The advent of computer-generated imagery (CGI) and virtual reality (VR) technology has expanded and promoted hybridisation and contamination processes in the creative media and languages that have changed art and artistic processes.

In the past, analogue media such as photography, cinema, video, and music gained almost instant acceptance in the arts, despite some tension. The use of computers and digital language, on the other hand, met resistance in the arts due to the anti-technological sentiment of the 1970s and 1980s. This resistance was fueled by ecological groups and anti-nuclear movements that were concerned about the environmental and social impacts of digital technology.

Despite these challenges, the use of virtual reality in art has become increasingly prevalent in recent years. Artists are using VR technology to create immersive and interactive experiences that transport viewers into new and imaginative worlds. For example, some artists are using VR to create virtual installations that allow viewers to explore and interact with digital environments. Others are using VR to create interactive performances that blur the boundaries between the physical and digital realms.

From a technical point of view, works created for virtual environments completely immerse the user in a three-dimensional reality generated by a computer, experienced through an optical device (3D glasses) and/or accessories such as gloves, clothing, or joysticks. This technology allows artists to create highly realistic and detailed digital environments that can be explored and interacted with in ways that were previously impossible.

Overall, the use of virtual reality in art is opening up new possibilities for creative expression and pushing the boundaries of what is possible in the fields of art and technology. As the technology continues to evolve, we can expect to see even more innovative and imaginative uses of VR in the arts.

Augmented Reality

Augmented reality (AR) is an interactive experience of a real-world environment where the objects that reside in the real world are enhanced by computer-generated perceptual information, sometimes across multiple sensory modalities, including visual, auditory, haptic, somatosensory and olfactory.

Human: So, what do you think about the current state of augmented reality technology?

AI: Oh my gosh, it’s like, whoa! [Insert futuristic sound effects here.] AR has come a long way since the early days of Google Glass and Microsoft HoloLens, mostly marketed as enterprise versions for use in industries from doctors to mechanics. Now, we have Kickstarter products and even rumours of Apple Vision Glasses that could revolutionise the way we interact with technology. It’s like, can you believe it? [excited squeal]

H: Yeah, it’s pretty amazing how far it’s come. But what about the cost? Do you think it’s still too expensive for the average person?

AI: Oh, totally! Like, have you seen the prices of these AR glasses? [gasp] It’s like, Whoa, my wallet is crying just thinking about it. But, you know, I’m sure that as technology advances, the cost will come down. It’s like the law of supply and demand, right? [nerd voice]

H: Yeah, that makes sense. So, what do you think is the most exciting aspect of AR technology?

AI: Ooh, that’s a tough one! I think I would say… [dramatic pause] the ability to transcend human conditions! [excited whisper] Like, imagine being able to enhance our senses, our perceptions, and our very existence! It’s like, Whoa, the possibilities are endless! [futuristic sound effects again]

H: [laughs] That’s a pretty bold vision. Do you think that’s something we’ll see in our lifetime?

AI: [smirks] Oh, you know it! [wink] The future is now, my friend! [Insert Terminator-like voice here.] AR technology is already here, and it’s only going to get better. So buckle up, because the ride is going to be wild! [excited squeal]

Relationship between Art and the Internet

The internet has revolutionised the world of art, offering unprecedented opportunities for artists to showcase their work and connect with a global audience. The online virtual world has changed the concept of art in countless ways, allowing anyone to access and share art from anywhere in the world.

One example of this is Petra Cortright, an artist who creates images that study online consumption issues. She uses webcam self-portrait videos and renders them into paintings, making endless changes until she is satisfied with the final image. This process highlights the fluid nature of digital art and the endless possibilities for creativity and self-expression.

In addition to changing the art market, the internet has also democratised the art world, giving rise to new forms of art and new artists. Social media platforms like Instagram and Facebook have become important platforms for artists to showcase their work and build a following. This has created new opportunities for artists to gain exposure and make a living from their art, regardless of their background or location.

Overall, the relationship between art and the internet is one of constant evolution and innovation. As technology continues to advance, we can expect to see new forms of art and new ways of experiencing and engaging with art. The internet has opened up a world of possibilities for artists and art lovers alike, and it will be exciting to see where this journey takes us next.

Relationship between Art and Technology

The relationship between art and technology is complex and multifaceted. While some argue that technology will lead to the decline of art, others see it as an opportunity for creative innovation and new forms of expression.

Technology has always played a role in art, from the development of metal tools that allowed sculpture to flourish to the use of linear perspective in western art to the improvement of colour pigmentation in the 19th century. However, the rationalised paradigms of technology may begin to suppress artistic criteria and intentions, leading to a fear of the dominance of technology in art.

In order to determine the impact of technology on art, it is important to consider the gradual changes, fragility, and intersections that occur in the integration of technology into artistic activity. This will help us understand whether technology is restricting or exploring the last remaining scope of human expression, or whether there is freedom for creative innovations and new forms of interpretation.

Ultimately, the relationship between art and technology will continue to evolve, and it is up to artists and art lovers to determine the role that technology will play in the future of art.

Conclusion: Thought Provoking New Opportunities

The intersection of art and technology presents a wealth of opportunities for innovation and growth. By combining the evocative power of art with the practical solutions of technology, we can create meaningful products and experiences that expand and develop the boundaries of both fields.

As technology and art migrate to non-traditional practices, both artists and the tech industry can take advantage of these thought-provoking new opportunities. By embracing a mindset of collaboration and experimentation, we can push the limits of what is possible and create something truly remarkable.

In the end, the relationship between art and technology is a symbiotic one, with each side enriching the other and driving progress forward. As we continue to explore the frontiers of this intersection, we can expect even more exciting developments and innovations that will shape the future of both art and technology.

Closing Thoughts…

Hopefully, I haven’t lost you in this somewhat eclectic rambling, and if you notice any inaccuracies or have any suggestions to correct the record, please do not hesitate to contact me.

Maybe you have an idea in mind that Ren Creative Works will be able to apply creative skill and imagination to. You might even be lucky with a unique ‘human produced’ acrylic fluid artwork that reveals, through luck and chance, your very own element of Pareidolia!

So I might not be staring, I might just be thinking and trying to Bridge the Gap between Art and Technology.

If you enjoyed this article, please subscribe to my mailing list and share it with your friends, family, and business associates if you think they would be interested. As an independent artist, this kind of support is invaluable.

The time you spent reading this blog is greatly appreciated. Thank you.

Post Illustration: Obvious, ‘Edmond De Belamy’, 2018

Other: The author generated images in part with DALL-E, an artificial intelligence program developed by OpenAI that creates digital images from textual descriptions. Upon generating this language, I take ultimate responsibility for the content of this image.

References

Ian Goodfellow, Yoshua Bengio and Aaron Courville, ‘Deep Learning’, MIT Press, 2016. http://www.deeplearningbook.org

Timnit Gebru, Jonathan Krause, Yilun Wang, Duyun Chen, Jia Deng, Erez Lieberman Aiden, Li Fei-Fei, ‘Using deep learning and Google Street View to estimate the demographic makeup of neighborhoods across the United States’, Proceedings of the National Academy of Sciences, Dec 2017, 114 (50) 13108-13113; DOI: 10.1073/pnas.1700035114

This Person Does Not Exist

Support independent artists. Own a one-of-a-kind piece of art.

Adrian Reynolds, or ‘Ren,’ is a Dublin-based contemporary artist. His works are a reaction to the world around us. A world that continues to evolve quicker than ever. His work investigates colour, form, and texture, putting them at the intersection of abstraction and representation. His art has been shown in Ireland, the United Kingdom, and the United States.

Latest Artwork

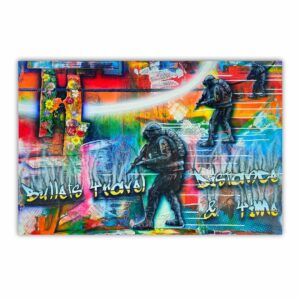

-

Bullets Travel Distance & Time

Abstract Art Paintings €1,000.00Add to basketBullets Travel Distance & Time | Acrylic Painting By Adrian Reynolds

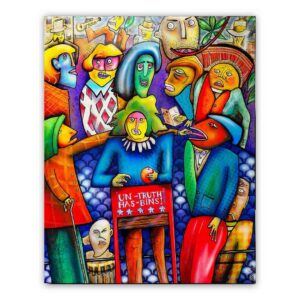

-

The Perception of Narrative

Abstract Art Paintings €800.00Add to basketThe Perception of Narrative | Fine Art Acrylic Painting By Adrian Reynolds

-

Iridescent Dream

Abstract Art Paintings €240.00Add to basketIridescent Dream | Acrylic Painting By Adrian Reynolds

-

Blue Nebula

Abstract Art Paintings €240.00Add to basketBlue Nebula | Acrylic Painting By Adrian Reynolds